I’m sure most people have at least heard the term “Moore’s Law” thrown around in computing. Moore’s Law, an observation made by Gordon Moore, says that the number of transistors in an integrated circuit doubles roughly every two years. You can read more about it here.

This law can be observed primarily in the semiconductor industry, where one of the main ways to increase processor speed and efficiency is to add more transistors. This is why you hear CPU manufacturers brag about the new “5 nm” process that their latest chip boasts (see image below). What they are saying is that they can make transistors smaller, which in turn allows them to fit more of them in a CPU package.

Credit: Apple

So you might be asking, “What’s the problem with Moore’s Law?” Well, I’m not going to go into the details, as major news outlets and other people have explained it much better than I could. But I did notice a very interesting exception to this law: GPUs.

When most people hear of a GPU, they probably think of NVIDIA. NVIDIA is primarily known for its GPU lineup, which is popular among gamers, data and computer scientists, and people working in almost anything related to digital media. And you can tell: as of the end of 2022, NVIDIA had a staggering 82% share of the GPU market.

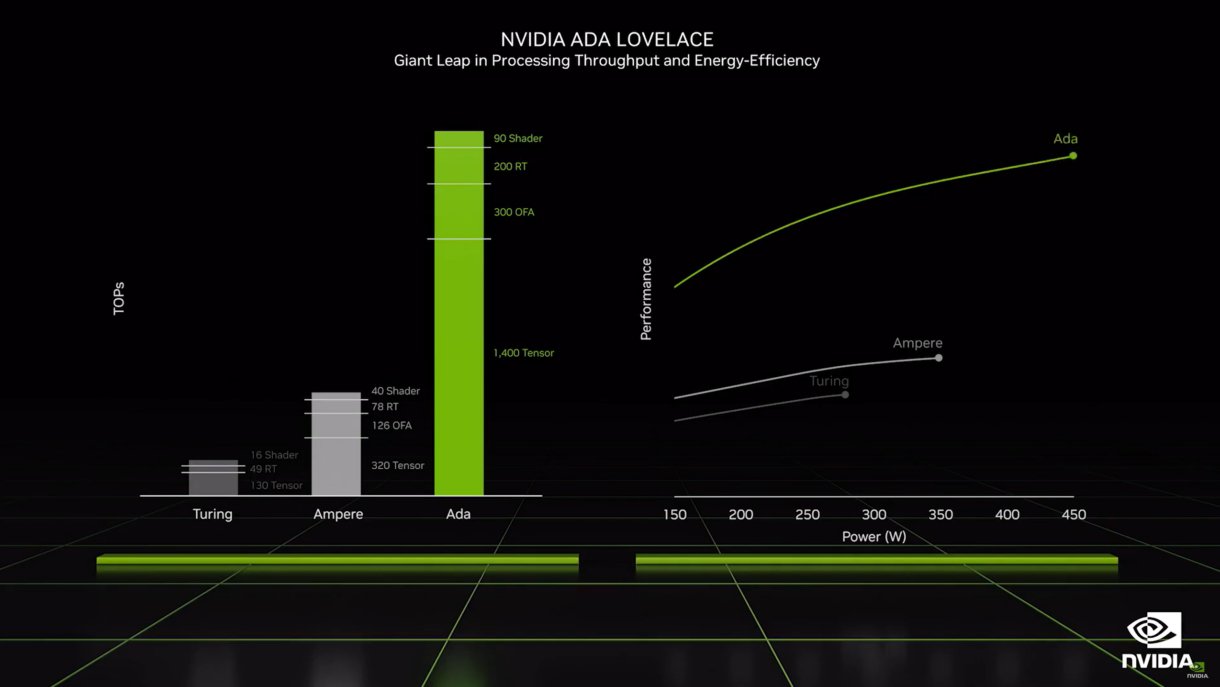

The thing with NVIDIA GPUs is that every two years, each new GPU lineup crushes its predecessor, and not just by double: performance increases by more than double. This can be seen in NVIDIA’s graphic below (of course, these graphs are somewhat misleading, but the idea is apparent).

Credit: Nvidia

This pattern has been observed by many experts and has become the backbone of a new law dubbed “Huang’s Law”. Named after NVIDIA CEO Jensen Huang, this law challenges Moore’s Law and puts forward the theory that NVIDIA’s GPU performance nearly triples every two years. This theory is, of course, controversial in the semiconductor industry, which you can read about here.
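To get a feel for how different "doubles every two years" and "nearly triples every two years" are once they compound, here is a rough sketch in Python. It assumes perfectly clean doubling and tripling, which real chips don't follow exactly (tripling is the upper end of "nearly triples"), and `growth_factor` is just a name I made up for illustration.

```python
def growth_factor(multiplier: float, years: float, period_years: float = 2.0) -> float:
    """Idealized compound growth: `multiplier` is applied once every `period_years`."""
    return multiplier ** (years / period_years)


# Compare the two idealized rates over a decade.
# Assumption: exactly 2x (Moore) and exactly 3x (Huang) per two-year period.
for years in (2, 4, 6, 8, 10):
    moore = growth_factor(2, years)
    huang = growth_factor(3, years)
    print(f"{years:>2} years: Moore x{moore:.0f}, Huang x{huang:.0f}")
```

Under these assumptions, a decade of doubling compounds to 32x, while a decade of tripling compounds to 243x, so a small-sounding difference in the two-year rate becomes a huge gap over time.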

It’s quite interesting how the modern computing world has evolved to the point where new laws are being introduced and old ones are either being retired or adapted.

I wonder where the computing world will end up in the next decade.